Ars examines the business of bandwidth starting with the forgotten—latency.

Bandwidth, the number of bits that a device or connection can transfer every second, is the number that everyone loves to talk about. Whether it be the gigabit per second that your Ethernet card does, the 85 megabits per second of your fancy new FTTP Internet connection, or the lousy 128 kilobits per second you get on hotel Wi-Fi, bandwidth gets the headlines.

Bandwidth isn't, however, the only number that's important when it comes to network performance. Latency—the time it takes the message you send to arrive at the other end—is also critically important. Depending on what you're trying to do, high latency can make your network connections crawl, even if your bandwidth is abundant.

Why latency matters

It's easy to understand why bandwidth is important. If a YouTube stream has a bitrate of 1Mb/s, it's obvious that to play it back in real time, without buffering, you'll need at least 1Mb/s of bandwidth. If the game you're installing from Steam is about 3.6GB and your bandwidth is about 8Mb/s, it will take about an hour to download.
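The Steam figure is easy to check by hand. Here is a minimal sketch of that arithmetic, assuming decimal units (1GB = 10^9 bytes, 1Mb = 10^6 bits) and ignoring protocol overhead; the function name is just for illustration.

```python
def download_seconds(size_bytes: float, bandwidth_bits_per_s: float) -> float:
    """Time to move size_bytes over a link, ignoring latency and overhead."""
    return size_bytes * 8 / bandwidth_bits_per_s


GB = 10**9  # decimal gigabyte (assumption)
Mb = 10**6  # megabit

# The article's example: a 3.6GB game over an 8Mb/s connection.
steam_seconds = download_seconds(3.6 * GB, 8 * Mb)
print(f"{steam_seconds / 60:.0f} minutes")
```

Under those assumptions the download works out to 3,600 seconds, which is the hour quoted above.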

Latency issues can be a bit subtler. Some are immediately obvious; others are less so.

Nowadays, almost all international phone calls are placed over undersea cables, but not too long ago, satellite routing was common. Anyone who's made a satellite call or seen one on TV will know that the experience is rather odd. Conversation takes on a disjointed character because of the noticeable delay between saying something and getting acknowledgement or a response from the person you're talking to. Free-flowing conversation is impossible. That's latency at work.

There are some applications, such as voice and video chatting, which suffer in exactly the same way as satellite calls of old. The time delay is directly observable, and it disrupts the conversation.

However, this isn't the only way in which latency can make its presence felt; it's merely the most obvious. Just as we acknowledge what someone is telling us in conversation (with the occasional nod of the head, "uh huh," "go on," and similar utterances), most Internet protocols have a similar system of acknowledgement. They don't send a continuous never-ending stream of bytes. Instead, they send a series of discrete packets. When you download a big file from a Web server, for example, the server doesn't simply barrage you with an unending stream of bytes as fast as it can. Instead, it sends a packet of perhaps a few thousand bytes at a time, then waits to hear back that they were received correctly. It doesn't send the next packet until it has received this acknowledgement.

Because of this two-way communication, latency can have a significant impact on a connection's throughput. All the bandwidth in the world doesn't help you if you're not actually sending any data because you're still waiting to hear back if the last bit of data you sent has arrived.
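To put rough numbers on this, here is a minimal sketch of the simplified send-one-packet-then-wait exchange described above. Real protocols such as TCP keep several packets in flight, which softens the effect, so treat this as an illustration rather than a model of any particular protocol. The 1,500-byte packet, the 100Mb/s link, and both round trip times are assumed values.

```python
def stop_and_wait_throughput(packet_bytes: int,
                             bandwidth_bits_per_s: float,
                             rtt_s: float) -> float:
    """Bits per second achieved when each packet must be acknowledged
    before the next one is sent."""
    bits = packet_bytes * 8
    transmit_s = bits / bandwidth_bits_per_s  # time to put the packet on the wire
    return bits / (transmit_s + rtt_s)        # one packet per transmit + round trip


LINK = 100 * 10**6  # hypothetical 100Mb/s link
PACKET = 1500       # hypothetical packet size in bytes

low_latency = stop_and_wait_throughput(PACKET, LINK, rtt_s=0.010)
high_latency = stop_and_wait_throughput(PACKET, LINK, rtt_s=0.500)

print(f"10 ms round trip:  {low_latency / 1e6:.2f} Mb/s")
print(f"500 ms round trip: {high_latency / 1e3:.1f} kb/s")
```

In both cases the link itself is the same; only the waiting time changes, and the achieved throughput falls far below the nominal bandwidth.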

How latency works

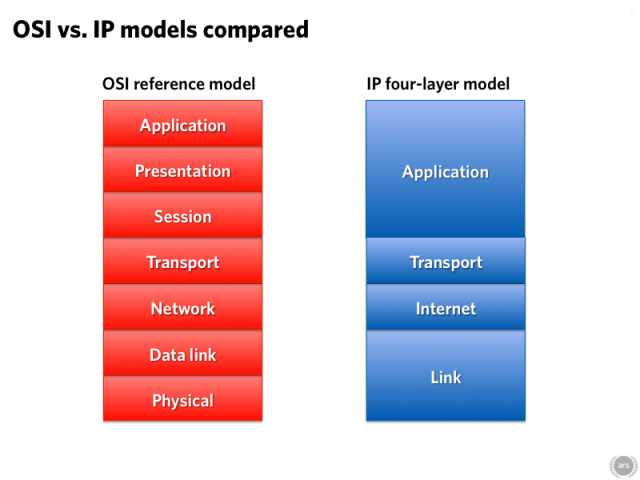

It's traditional to examine networks using a layered model that separates different aspects of the network (the physical connection, the basic addressing and routing, the application protocol) and analyze them separately. There are two models in wide use, a 7-layered one called the OSI model and a 4-layered one used by IP, the Internet Protocol. IP's 4-layer model is what we're going to talk about here. It's a simpler model, and for most purposes, it's just as good.

Sometimes c just isn't enough

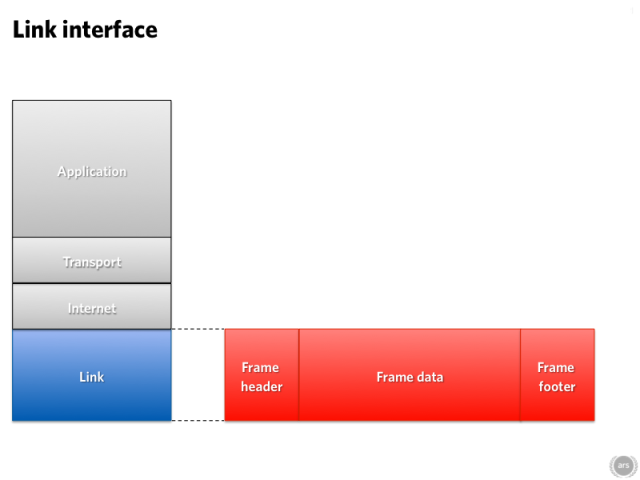

The bottom layer is called the link layer. This is the layer that provides local physical connectivity; this is where you have Ethernet, Wi-Fi, dial-up, or satellite connectivity, for example. This is the layer where we get bitten by an inconvenient fact of the universe: the speed of light is finite.

Take those satellite phones, for example. Communications satellites are in geostationary orbits, putting them about 35,786 kilometers above the equator. Even if the satellite is directly overhead, a signal is going to have to travel 71,572 km—35,786 km up, 35,786 km down. If you're not on the equator, directly under the satellite, the distance is even greater. Even at light speed that's going to take 0.24 seconds; every message you send over the satellite link will arrive a quarter of a second later. The reply to the message will take another quarter of a second, for a total round trip time of half a second.
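The satellite figures follow directly from distance divided by speed. This short sketch assumes the satellite is directly overhead and uses the vacuum speed of light; the constant and function names are illustrative.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in a vacuum
GEO_ALTITUDE_KM = 35_786           # geostationary altitude quoted in the article


def propagation_delay_s(distance_km: float,
                        speed_km_s: float = SPEED_OF_LIGHT_KM_S) -> float:
    """Time for a signal to cover distance_km at speed_km_s."""
    return distance_km / speed_km_s


# Up to the satellite and back down again: 71,572 km in one direction.
one_way = propagation_delay_s(2 * GEO_ALTITUDE_KM)
round_trip = 2 * one_way

print(f"one way: {one_way:.2f} s, round trip: {round_trip:.2f} s")
```

This covers propagation only; any processing delay on the satellite or on the ground comes on top of it.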

Undersea cables are a whole lot shorter. While light travels slower in glass than it does in air, the result is a considerable improvement. The TAT-14 cable between the US and Europe has a total round trip length of about 15,428 km, barely more than a fifth of the distance that a satellite connection has to go. Using undersea cables like TAT-14, the round trip time between London and New York can be brought down below 100 milliseconds, reaching about 60 milliseconds on the fastest links. The speed of light means that there's a minimum bound of about 45 milliseconds between the cities.
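For a rough sense of what the cable length alone costs, the sketch below computes the propagation time around TAT-14's quoted 15,428 km. The refractive index of 1.47 is an assumed, typical value for optical fiber and does not come from the article; equipment along the route would add further delay.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458
FIBER_REFRACTIVE_INDEX = 1.47  # assumption: typical value for glass fiber


def fiber_delay_ms(path_km: float,
                   refractive_index: float = FIBER_REFRACTIVE_INDEX) -> float:
    """Propagation time in milliseconds for light travelling path_km of fiber."""
    speed_in_glass = SPEED_OF_LIGHT_KM_S / refractive_index
    return path_km / speed_in_glass * 1000


TAT14_ROUND_TRIP_KM = 15_428  # figure quoted in the article
SATELLITE_PATH_KM = 71_572    # up and down to a geostationary satellite

tat14_ms = fiber_delay_ms(TAT14_ROUND_TRIP_KM)
ratio = TAT14_ROUND_TRIP_KM / SATELLITE_PATH_KM

print(f"TAT-14 propagation: {tat14_ms:.0f} ms")
print(f"cable length / satellite path: {ratio:.2f}")
```

With that assumed index, the result lands comfortably under the 100 millisecond mark, and the length ratio comes out at a little over one fifth.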

The link layer can have an impact closer to home, too. Many of us use Wi-Fi on our networks. The airwaves are a shared medium: if one system is transmitting on a particular frequency, no other system nearby can use the same frequency. Sometimes two systems will start broadcasting simultaneously anyway. When this happens, they have to stop broadcasting and wait a random amount of time for a quiet period before trying again. Wired Ethernet can have similar collisions, though the modern prevalence of switches (replacing the hubs of old) has tended to make them less common.
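The random wait is what breaks the tie between colliding systems. The toy simulation below is not the actual Wi-Fi or Ethernet procedure; it simply assumes that each station picks a random slot out of a fixed number, and that the round succeeds when exactly one station has picked the earliest slot. The slot count and station count are made-up values.

```python
import random


def attempts_until_clear(stations: int, slots: int, rng: random.Random) -> int:
    """Count contention rounds until exactly one station holds the earliest slot.

    Requires slots >= 2 when stations >= 2, otherwise every round is a tie.
    """
    attempts = 0
    while True:
        attempts += 1
        picks = [rng.randrange(slots) for _ in range(stations)]
        if picks.count(min(picks)) == 1:  # a single winner, so no collision
            return attempts


rng = random.Random(42)  # seeded so repeated runs behave the same way
trials = [attempts_until_clear(stations=2, slots=16, rng=rng)
          for _ in range(10_000)]
mean_attempts = sum(trials) / len(trials)

print(f"average rounds needed: {mean_attempts:.2f}")
```

Every extra round is time during which nothing useful is sent, which is how a busy shared medium turns into added latency.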

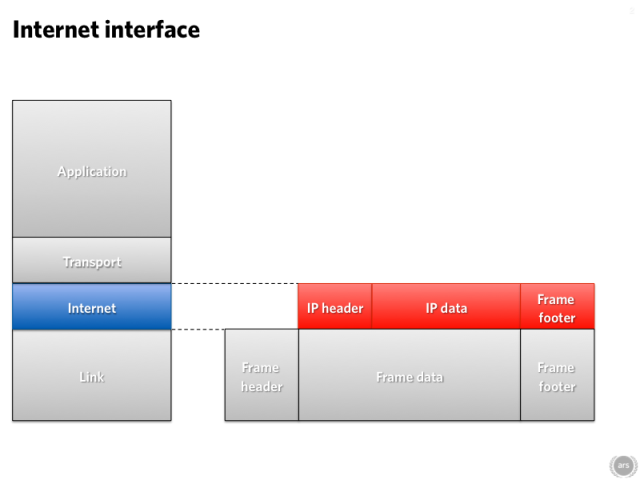

The Internet is an internetwork

The link layer is the part that moves traffic around the local network. There are usually lots of links involved in using the Internet. For example, you might have home Wi-Fi and copper Ethernet to your modem, VDSL to a cabinet in the street, optical MPLS to an ISP, and then who knows. If you're unlucky, you might even have some satellite action in there. How does the data know where to go? That's all governed by the next layer up: the internet layer. This links the disparate hardware into a single large internetwork.

The internet layer offers plenty of scope for injecting latency of its own, and this isn't just a few milliseconds here and there for signals to move around the world. You can get seconds of latency without the packets of data going anywhere.

The culprit here is Moore's Law, the rule of thumb stating that transistor density doubles every 18 months or so. This doubling has the consequence that RAM halves in price—or becomes twice as large—every 18 months. While RAM was once a precious commodity, today it's dirt cheap. As a result, systems that once had just a few kilobytes of RAM are now blessed with copious megabytes.

Normally, this is a good thing. Sometimes, however, it's disastrous. A widespread problem with IP networks tends more to the disastrous end of the spectrum: bufferbloat.

Network traffic tends to be bursty. Click a link on a webpage and you'll generate lots of traffic as your browser fetches the new page from the server, but then the connection will be idle for a while as you read the new page. Network infrastructure (routers, network cards, that kind of thing) has to have a certain amount of buffering to temporarily hold packets before transmitting them, so that it can handle this bursty behavior and smooth over some of the peaks. The difficult part is getting those buffers the right size. A lot of the time, they're far, far too big.

That sounds counter-intuitive, since normally when it comes to memory, bigger means better. As a general rule, the network connection you have locally, whether wired or wireless, is a lot faster than your connection to the wider Internet. It's not too unusual to have gigabit local networking with just a megabit upstream bandwidth to the 'Net, a ratio of 1,000:1.

Thanks again to Moore's Law (making it ridiculously cheap to throw in some extra RAM), the DSL modem/router that joins the two networks might have several megabytes of buffer in it. Even a megabyte of buffer is a problem. Imagine you're uploading a 20MB video to YouTube, for example. A megabyte of buffer will fill in about eight milliseconds, because it's on the fast gigabit connection. But a megabyte of buffer will take eight seconds to actually upload to YouTube.
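The mismatch between those two numbers is the whole problem, and it is simple to reproduce. This sketch assumes a decimal megabyte (10^6 bytes), a 1Gb/s local network, and a 1Mb/s uplink, matching the figures above; the names are illustrative.

```python
def transfer_seconds(size_bytes: int, rate_bits_per_s: int) -> float:
    """Time to move size_bytes at rate_bits_per_s."""
    return size_bytes * 8 / rate_bits_per_s


BUFFER_BYTES = 10**6  # one megabyte of buffer in the modem/router
LAN_RATE = 10**9      # gigabit local network
UPLINK_RATE = 10**6   # one megabit upstream

fill_s = transfer_seconds(BUFFER_BYTES, LAN_RATE)      # how fast the buffer fills
drain_s = transfer_seconds(BUFFER_BYTES, UPLINK_RATE)  # how long it takes to empty

print(f"fill: {fill_s * 1000:.0f} ms, drain: {drain_s:.0f} s")
```

The buffer fills a thousand times faster than it drains, which mirrors the 1,000:1 ratio between the two links.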

If the only traffic you cared about was your YouTube connection, this wouldn't be a big deal. But it normally isn't. Normally you'll leave that tediously slow upload to churn away in one tab while continuing to look at cat pictures in another tab. Here's where the problem bites you: each request you send for a new cat picture will go into the same buffer, at the back. It will have to wait for the megabyte of traffic in front of it to be uploaded before it can finally get onto the Internet and retrieve the latest Maru. Which means it has to wait eight seconds. Eight seconds in which your browser can do nothing but twiddle its thumbs.
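The wait can be sketched as a simple first-in, first-out queue. This is a minimal model under stated assumptions, not a description of any real router: the buffer already holds one megabyte of upload traffic, split into hypothetical 1,250-byte packets, and a hypothetical 500-byte request joins at the back of a 1Mb/s uplink.

```python
from collections import deque


def queueing_delays(packets, uplink_bits_per_s):
    """Drain packets in FIFO order; return (label, seconds until fully sent)."""
    queue = deque(packets)
    clock = 0.0
    finished = []
    while queue:
        label, size_bytes = queue.popleft()
        clock += size_bytes * 8 / uplink_bits_per_s  # time to send this packet
        finished.append((label, clock))
    return finished


UPLINK_RATE = 10**6   # one megabit upstream
PACKET_BYTES = 1_250  # hypothetical size; 800 of them make one megabyte

backlog = [("upload", PACKET_BYTES)] * 800  # a full megabyte already queued
request = ("cat picture request", 500)      # hypothetical request size

results = queueing_delays(backlog + [request], UPLINK_RATE)
label, done_at = results[-1]
alone = queueing_delays([request], UPLINK_RATE)[0][1]

print(f"{label} behind the upload: {done_at:.3f} s")
print(f"{label} on an idle link:   {alone:.3f} s")
```

On an idle link the request leaves in a few milliseconds; behind the full buffer it leaves only after the whole backlog has drained.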

Eight seconds to load a webpage is bad; it's an utter disaster when you're trying to transmit VoIP voice traffic or Skype video.
